Dynaconf: A Comprehensive Guide to Configuration Management in Python

Dynaconf is a powerful configuration management library for Python that simplifies the process of managing and accessing application settings. It offers a wide range of features, including support for multiple configuration sources, environment variables, and dynamic reloading.

Main features:

- Multiple configuration sources: Dynaconf can read settings from various sources, including environment variables, files, and HashiCorp Vault servers. This flexibility allows you to centralize your configuration management and adapt to different deployment environments.

- Environment variable support: Dynaconf seamlessly integrates with environment variables, making it easy to inject configuration values from your system or containerized environments.

- Dynamic reloading: Dynaconf can re-read its configuration sources while the application is running (for example by calling settings.reload()), so changed settings can take effect without restarting the application.

Supported configuration file formats:

Dynaconf supports a variety of configuration file formats, including:

- TOML (recommended)

- YAML

- JSON

- INI

- Python files

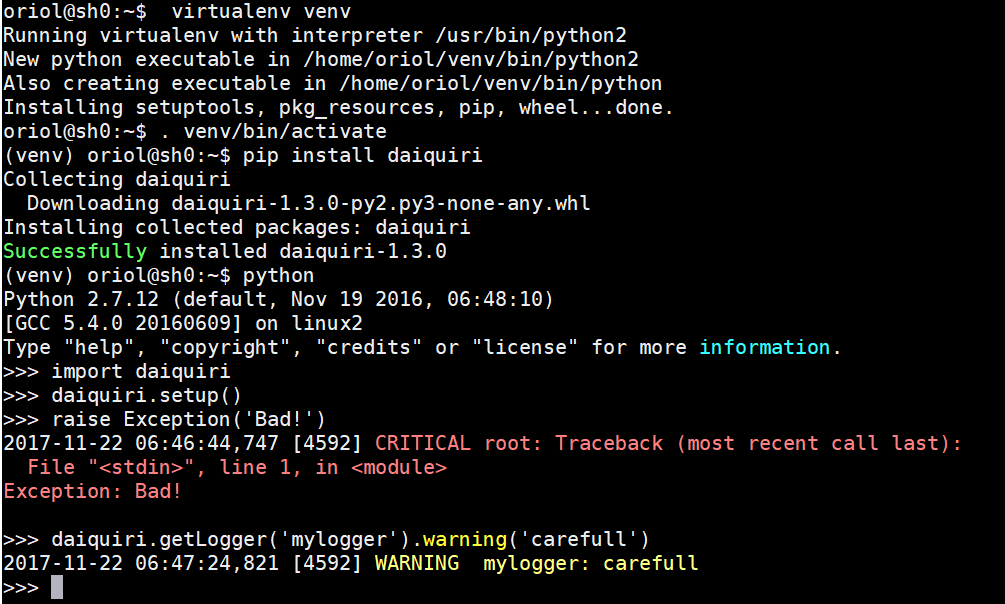

Installation:

Dynaconf can be installed using either pip or poetry:

pip install dynaconf

# or

poetry add dynaconf

How to initialize Dynaconf


To initialize Dynaconf in your project, run the following command in your project’s root directory:

dynaconf init -f toml

This command will create the following files:

- config.py: This file creates the Dynaconf settings object that the rest of your code imports.

- settings.toml: This file contains your application settings.

- .secrets.toml: This file contains your sensitive data, such as passwords and tokens.

config.py

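The config.py file is a Python module that creates the Dynaconf settings object which the rest of your application imports. The name config.py is the convention used by dynaconf init; the settings object can be created in any module you prefer.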

Here is an example of a config.py file:

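from dynaconf import Dynaconf

# Load settings.toml, then .secrets.toml; environments=True enables [default]/[production] sections
settings = Dynaconf(settings_files=["settings.toml", ".secrets.toml"], environments=True)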

settings.toml

The settings.toml file contains your application settings. This file is optional, but it is the recommended way to store your settings.

Here is an example of a settings.toml file:

[default]

debug = false

host = "localhost"

port = 5000

.secrets.toml

The .secrets.toml file contains your sensitive data, such as passwords and tokens. This file is optional, but keeping sensitive data in a file separate from your application settings makes it easy to exclude that file from version control.

Here is an example of a .secrets.toml file:

[default]

database_password = "my_database_password"

api_token = "my_api_token"

Importing and using the Dynaconf settings object

Once you have initialized Dynaconf, you can import the settings object into your Python code. For example:

from config import settings

You can then access your application settings using the settings object. For example:

setting_value = settings.get('KEY')

Dynaconf also supports accessing settings using attributes. For example:

setting_value = settings.KEY
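Dynaconf treats top-level keys case-insensitively, so settings.KEY, settings.key and settings.get('key') return the same value, and get() accepts an optional default for missing keys. The class below is a hypothetical stand-in (SettingsView is not part of Dynaconf) that imitates this access pattern with a plain dictionary, which can be handy as a lightweight test double.

```python
class SettingsView:
    """Hypothetical stand-in mimicking settings.get('KEY') and settings.KEY."""

    def __init__(self, values: dict) -> None:
        # Store keys upper-cased so lookups ignore case.
        self._values = {key.upper(): value for key, value in values.items()}

    def get(self, key: str, default=None):
        return self._values.get(key.upper(), default)

    def __getattr__(self, name: str):
        # Only called when normal attribute lookup fails.
        try:
            return self._values[name.upper()]
        except KeyError:
            raise AttributeError(name) from None


settings = SettingsView({"debug": False, "port": 5000})
print(settings.PORT, settings.port, settings.get("Debug"), settings.get("TIMEOUT", 30))  # 5000 5000 False 30
```

Attribute access raises AttributeError for unknown keys, while get() returns the supplied default, so get() is the safer choice for optional settings.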

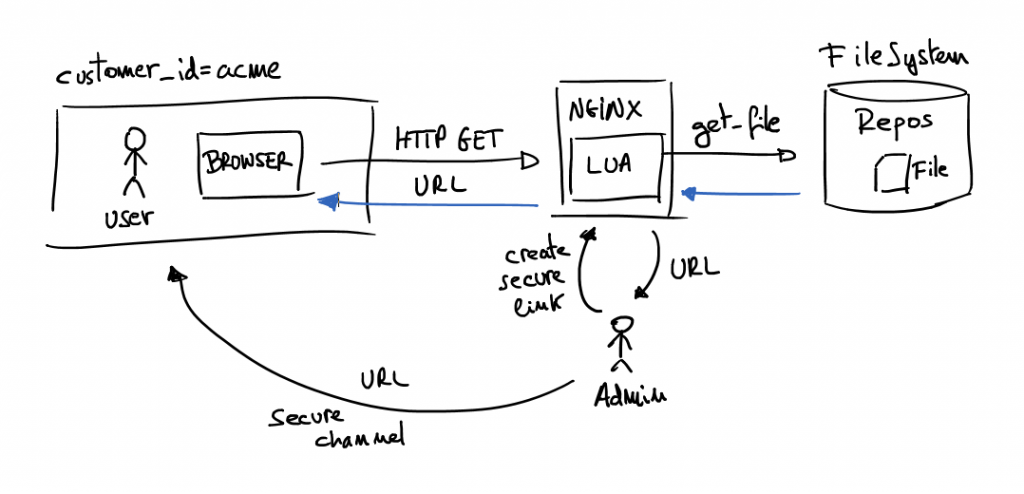

Importing environment variables in Docker Compose:

When using Dynaconf with Docker Compose, a straightforward strategy is to define the environment variables in your docker-compose.yml file and read them in Python through Dynaconf’s envvar_prefix option.

version: "3.8"

services:
  app:
    build: .
    environment:
      - APP_DEBUG=true
      - APP_HOST=0.0.0.0
      - APP_PORT=8000
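With envvar_prefix="APP", Dynaconf only looks at variables whose names start with APP_ and drops that prefix, so APP_HOST becomes the HOST setting. The helper below is a hypothetical stdlib sketch of just that filtering step; Dynaconf's loader additionally converts the string values to Python types.

```python
import os
from collections.abc import Mapping


def settings_from_env(prefix: str, environ: Mapping[str, str] = os.environ) -> dict[str, str]:
    """Hypothetical sketch: keep PREFIX_* variables and strip the prefix."""
    marker = f"{prefix.upper()}_"
    return {
        name[len(marker):]: value
        for name, value in environ.items()
        if name.startswith(marker)
    }


# The variables docker-compose.yml would inject, plus an unrelated one.
compose_env = {"APP_DEBUG": "true", "APP_HOST": "0.0.0.0", "APP_PORT": "8000", "PATH": "/usr/bin"}
print(settings_from_env("APP", compose_env))  # {'DEBUG': 'true', 'HOST': '0.0.0.0', 'PORT': '8000'}
```

The prefix keeps unrelated variables such as PATH out of your settings, which matters inside containers where the environment is shared with the base image.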

In your Python code, you can access these environment variables using Dynaconf:

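from dynaconf import Dynaconf

# Read every APP_* environment variable (APP_DEBUG -> settings.DEBUG)
settings = Dynaconf(envvar_prefix="APP")

debug = settings.get("DEBUG")  # True (parsed as a boolean)
host = settings.get("HOST")  # "0.0.0.0"
port = settings.get("PORT")  # 8000 (parsed as an integer)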

Conclusion:

Dynaconf is a versatile and powerful configuration management library for Python that simplifies the process of managing and accessing application settings. Its comprehensive feature set and ease of use make it an ideal choice for a wide range of Python projects.
