2015/12/23

1 comentari

Reading time: 4 – 7 minutes

Introduction

Main target of this post is describe how to organize flash partitions and how to modify default OpenWRT boot sequence to support a flexible and powerful rescue mode for Raspberry PI based projects. Just to clarify the explaination. When OpenWRT is build on a flash card for Raspberry, there are only two partitions.

The first one is vFat partition with kernel, firmware and other configuration files; the second one is a ext4 partition with root filesystem. Boot sequence loads the kernel and then mount root partition and run the init script. If ext4 filesystem is corrupted or could not be mounted boot sequence is stoped and there is no solution without extracting the flash card.

Features

In this blog entry I’m going to describe a partition table and boot sequence strategy to avoid this kind of problems. Of course, there are other solutions to get similar results but I think this one is simple and powerful at the same time.

Summarizing features of this solution:

- reduce risk when using intensive writing app

- reduce damage risk on flash memories

- fail-safe mode pressing a button

- support application upgrades using opkg packages

- support operative system upgrades using opkg packages

This solution proposal assume:

- wear leveling protection solved by flash card

- button connected to GPIO pins

The idea

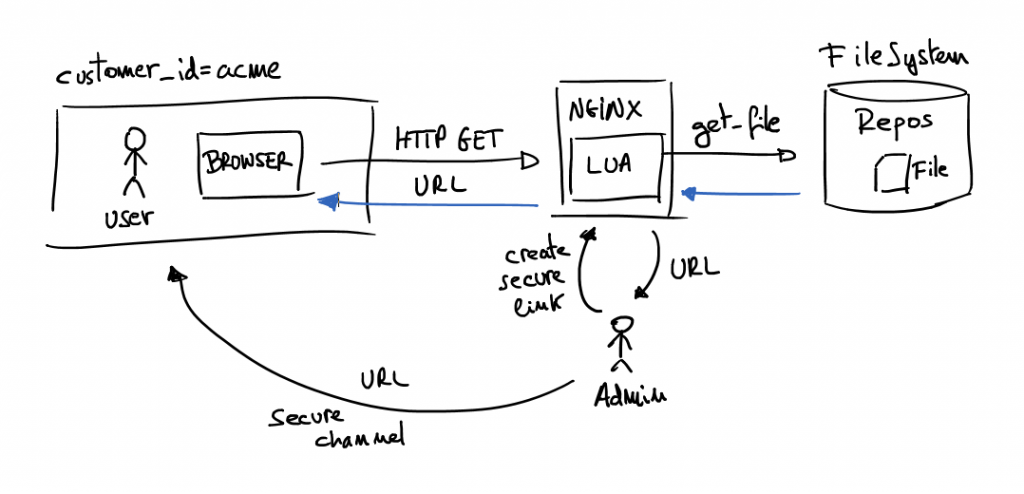

Raspberry PI requires a vfat partition as its first flash partition where there are several required files for booting process, this is a bootloader substitution. For example, in that partition there are files like: start*.elf and bootcode.bin which are the GPU firmware and bootloaders. Another key file is kernel.img; this is the kernel used for booting. Bootloader parameters for kernel booting are in a file called cmdline.txt and firmware parameters are set in config.txt.

At this point the most important think to take into account is kernel.img file and cmdline parameters. Because kernel is loaded and executed by default with cmdline parameters set. When kernel boot process finishes root filesystem and init process sequence will be figured out from cmdline parameters.

At this point take a look on proposed partition table could be useful: (spaces are just as a reference, use what you need)

p1 - vfat (~50MB)

p2 - ext4 - operative system base (read-only) (~150MB)

p3 - ext4 - operative system (read-write) (~250MB)

p4 - logical partition

p4.1 - ext4 - your_application files (usually read-only)

p4.2 - ext4 - your_application data (usually read-write)

Fail-safe boot process key is partition p2 where a minimal OpenWRT installation with a modified init sequence is found. Main idea here is detect if a GPIO shortcut is done, usually this is done just pressing a physical button and you can interact with the user emitting some beep, for example, you can tell the user when you are waiting for button press using a beep and then emit two beeps when button press is detected or nothing if no button is pressed in 3 seconds. Finally the idea is detect if you need a regular boot or a fail-safe boot.

My suggestion for minimal OpenWRT is a small footprint installation of OpenWRT without kernel modules, just the monolitic kernel loaded. Then reduce init sequence to the minimum and add fail-safe logic (GPIO button capture); if button is pressed stop boot sequence and give a shell to the user. Regular way will be invoke init file of the rootfs (p3 in the partition table).

I think the idea is simple and the complexity is reduced in two parts both of them are the init file. To be more precise the p2 partition table has its own init file and p3 the other one. p2 init file load the minimum hardware to control button and give rescue environment when it’s needed. And p3 init file mounts read-write partition and the regular filesystem with regular boot processes and all kind of stuff that you need.

Final notes

I know this is not a very practical post, but my intention is only share some ideas that I have in mind. I spend most of my time designing architectures and I think this is a very powerful architecture of a boot sequence for some professional projects based on Raspberry PI and OpenWRT.

The best way to do what I describe in this post is putting p2 in a initrd file which is referenced in kernel parameters. Because then all read-only system is a RAM partition and rootfs init file has the PID 1 dropping dual-init file complexity. But I decided to modify this part because in the past I had some problems creating initrd files specially when required space for that partition is bigger than RAM. Anyway it’s important to take in account that initrd files has the same purpose as the proposed p2 partition.

Useful links